QA Bottlenecks vs. AI Solutions

AI QA automates test creation, self-heals flaky tests, expands edge-case coverage, and cuts maintenance and costs to speed releases.


Manual testing is slowing down software teams, but AI-driven QA tools are changing the game. Here's why:

- Manual QA Challenges: Testing cycles can take days, cost up to $250,000 annually, and rely heavily on repetitive work. Human errors, flaky tests, and slow scalability worsen the problem.

- AI-Powered Solutions: Platforms like Rock Smith automate test creation, self-heal broken tests, and reduce regression times by 90%. They also cut costs by up to 80% and expand test coverage to 95%.

- Key Benefits: Faster feedback, fewer bugs, reduced maintenance, and scalable testing without increasing headcount.

AI isn't just improving QA - it's transforming it into a faster, smarter, and more efficient process.



Common QA Bottlenecks in Manual Testing

Understanding the challenges of manual testing is key to appreciating how AI solutions, like those developed by Rock Smith, can reshape QA workflows.

Manual Test Creation and Maintenance

Creating and maintaining manual tests is a major drain on QA resources. For instance, a manual regression cycle involving 400–800 test cases can take anywhere from 65 to 130 hours. This makes it nearly impossible to keep up with the rapid pace of daily or weekly agile deployments. The problem is compounded by the fragility of scripts that rely on unstable locators and XPaths. Even small changes - such as renaming a button or altering a form layout - can break these scripts, forcing teams to spend hours fixing them instead of expanding test coverage.

Flaky Tests from UI and Code Changes

Modern front-end frameworks frequently alter the DOM, which can cause locators to fail even with minor updates. According to Google, about 1.5% of all test runs are flaky, and nearly 16% of tests exhibit some level of flakiness. The financial impact is staggering - large enterprises lose over $2,200 per developer, per month, chasing down flaky test results.

"The DOM changes out from under you. Agent takes a snapshot, decides to click a button, but while it was thinking, a loading spinner appeared and shifted the layout. Click lands on the wrong element. Test fails." – Test-Lab.ai

This fragility is further complicated by timing and synchronization issues. For example, tests often try to interact with UI elements before they are fully loaded or while a loading spinner is still visible, leading to race conditions. Microsoft’s internal systems revealed around 49,000 flaky tests, which could have caused approximately 160,000 false failures if not addressed. Research also shows that 75% of flaky tests are problematic from the moment they are introduced.
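The race condition described above is usually avoided by waiting on an explicit condition rather than interacting immediately. Here is a minimal sketch of that idea in plain Python. `wait_until` and `FakePage` are hypothetical names used for illustration only; a real suite would rely on the waiting facilities of its browser automation library.

```python
import time
from typing import Callable


def wait_until(condition: Callable[[], bool], timeout: float = 5.0,
               interval: float = 0.05) -> bool:
    """Poll `condition` until it returns True or `timeout` seconds elapse."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        if condition():
            return True
        time.sleep(interval)
    return condition()  # one last check once the deadline has passed


class FakePage:
    """Hypothetical stand-in for a page whose spinner hides after a few polls."""

    def __init__(self, polls_until_ready: int) -> None:
        self._remaining = polls_until_ready

    def spinner_visible(self) -> bool:
        if self._remaining > 0:
            self._remaining -= 1
            return True
        return False


page = FakePage(polls_until_ready=3)
# Only interact with the page once the spinner is gone.
ready = wait_until(lambda: not page.spinner_visible(), timeout=1.0, interval=0.01)
```

The test proceeds only when `ready` is true, so a slow spinner produces a clear timeout instead of a click on the wrong element.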

Limited Edge Case Coverage and Scalability

Manual testing effort scales linearly with the number of test cases - doubling the test cases means doubling the time or the number of testers needed. On average, a manual tester can execute only about 50 test cases per day. This pace is far too slow for complex applications with thousands of scenarios. The sheer variety of browser environments, data combinations, and edge cases makes it impossible to achieve full manual coverage.

"It is nearly impossible for manual testers to cover every possible user journey and edge case, leaving applications vulnerable to unexpected failures." – Marcus Avangard, Engineer & Entrepreneur

Manual testing also suffers from human limitations like fatigue and inconsistency. One tester might log a bug that another might dismiss as "expected behavior." On top of that, the process involves extensive documentation to record steps, observations, and results, which can further slow things down. As applications grow more complex, these challenges create bottlenecks that delay releases and undermine quality. These issues highlight the urgent need for AI-driven automation to streamline QA workflows.

How AI Solves QA Bottlenecks

AI-powered platforms are reshaping how quality assurance (QA) is handled by automating workflows, reducing the time spent on maintenance, and broadening testing scope. By late 2025, 81% of development teams had already integrated AI into their testing processes.

AI-Driven Test Creation and Semantic Targeting

One of the most tedious aspects of manual testing is creating and maintaining test cases. AI steps in by automatically generating test cases based on natural language inputs like user stories, requirements, and acceptance criteria - cutting out the need for manual brainstorming. For instance, Rock Smith's platform uses semantic targeting to interpret the intent of a test. Instead of relying on fragile CSS selectors or XPaths, it understands commands like "click the login button" and can locate the element even if the underlying code changes.

This approach slashes maintenance time. Traditional QA teams typically spend 30–40% of their time maintaining tests. Test cases generated by large language models (LLMs) achieve a success rate of 70–90%, depending on the complexity of the application. Plus, the cost of AI-generated test suites is impressively low - around $0.005 per user story. Rock Smith's semantic targeting also enables tests to adapt automatically when UI elements change, reducing the need for manual updates.

"AI transforms QA from a bottleneck into an accelerator." – Eugenio Scafati, CEO, Autonoma

Another game-changer is the ability for non-technical team members, like product managers and designers, to create and validate test flows using no-code AI tools. This removes the reliance on coding expertise, making QA more accessible to a wider range of contributors.

Self-Healing Tests and Edge Case Automation

One of the biggest challenges in automated testing is dealing with flakiness caused by changes in UI or code. AI-driven platforms address this by using machine learning to detect broken locators and update them automatically. By analyzing historical data, these tools find the closest match based on attributes like visible text, position, and tag structure. This self-healing capability has a 94% success rate in fixing invalid selectors and can reduce test failures by up to 90%.
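To make the "closest match" idea concrete, here is a minimal sketch of a locator-healing step that scores candidates on tag, visible text, and position. It is an illustration under stated assumptions, not Rock Smith's or any vendor's actual algorithm: the `Element` type, the weights, and the threshold are all hypothetical.

```python
from dataclasses import dataclass
from difflib import SequenceMatcher
from typing import Optional


@dataclass(frozen=True)
class Element:
    """Hypothetical snapshot of the attributes recorded for a UI element."""
    tag: str
    text: str
    x: int
    y: int


def similarity(old: Element, candidate: Element) -> float:
    """Score in [0, 1]; the weights are arbitrary and chosen for illustration."""
    tag_score = 1.0 if old.tag == candidate.tag else 0.0
    text_score = SequenceMatcher(None, old.text.lower(),
                                 candidate.text.lower()).ratio()
    distance = abs(old.x - candidate.x) + abs(old.y - candidate.y)
    position_score = 1.0 / (1.0 + distance / 100.0)
    return 0.3 * tag_score + 0.5 * text_score + 0.2 * position_score


def heal(old: Element, candidates: list[Element],
         threshold: float = 0.6) -> Optional[Element]:
    """Return the best-scoring candidate, or None if nothing is close enough."""
    if not candidates:
        return None
    best = max(candidates, key=lambda c: similarity(old, c))
    return best if similarity(old, best) >= threshold else None


# The recorded element no longer exists; the button was renamed and moved.
recorded = Element("button", "Log in", 100, 200)
current_page = [
    Element("button", "Login", 120, 200),
    Element("a", "Forgot password?", 100, 240),
    Element("button", "Sign up", 300, 200),
]
healed = heal(recorded, current_page)
```

The threshold matters: without it, a healer would always pick something, and a wrong match can hide a real regression behind a passing test.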

Rock Smith's AI agents also tackle timing and synchronization issues. The platform identifies repetitive patterns or infinite loops during execution and adjusts by retrying with a different context or escalating to a more advanced model. This reduces flakiness rates in end-to-end regression testing to just 8.3%, compared to nearly 16% in traditional test suites.
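The loop-detection-and-escalation pattern can be sketched in a few lines. This is a hypothetical illustration rather than the platform's implementation: `is_stuck`, `run_with_escalation`, the model names, and the `fake_step` agent are assumptions made for the example.

```python
from collections import Counter
from typing import Callable, Optional, Sequence


def is_stuck(actions: Sequence[str], window: int = 6, max_repeats: int = 3) -> bool:
    """True if any action occurs at least `max_repeats` times in the last `window` actions."""
    recent = actions[-window:]
    return any(count >= max_repeats for count in Counter(recent).values())


def run_with_escalation(step: Callable[[str, list[str]], str],
                        models: Sequence[str],
                        max_steps: int = 20) -> Optional[str]:
    """Try each model in order; move to the next one when the agent loops."""
    for model in models:
        history: list[str] = []  # fresh context for every attempt
        for _ in range(max_steps):
            action = step(model, history)
            if action == "done":
                return model
            history.append(action)
            if is_stuck(history):
                break  # escalate to the next model
    return None


def fake_step(model: str, history: list[str]) -> str:
    """Hypothetical agent: the small model loops, the large one finishes."""
    if model == "small":
        return "click #submit"
    return "done" if len(history) >= 2 else f"fill field {len(history)}"


winner = run_with_escalation(fake_step, ["small", "large"])
```

Starting each attempt with an empty history corresponds to "retrying with a different context"; walking down the `models` list corresponds to escalating to a more advanced model.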

AI further excels in covering edge cases - those tricky scenarios that often go unnoticed by human testers. It identifies data-driven issues like numeric overflows, environmental problems like network latency, and behavioral anomalies such as out-of-sequence workflows. Rock Smith's edge case generation even suggests negative scenarios, like malformed inputs or session timeouts, expanding testing beyond the usual "happy path."
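As a rough illustration of what generated edge cases look like, the sketch below applies classic boundary-value analysis to a numeric field and lists a few malformed string inputs. It is a simple rule-based stand-in, not an AI model and not Rock Smith's generator; both function names and the sample payloads are hypothetical.

```python
def boundary_values(minimum: int, maximum: int) -> list[int]:
    """Values just outside, on, and just inside each limit (duplicates removed)."""
    raw = [minimum - 1, minimum, minimum + 1, maximum - 1, maximum, maximum + 1]
    return list(dict.fromkeys(raw))  # keeps order while dropping repeats


def negative_string_inputs(max_length: int) -> list[str]:
    """Malformed inputs a happy-path test would normally never try."""
    return [
        "",                           # empty value
        " ",                          # whitespace only
        "a" * (max_length + 1),       # one character over the limit
        "<script>alert(1)</script>",  # markup that should be escaped
        "' OR '1'='1",                # quote characters that should be handled safely
    ]


quantity_cases = boundary_values(1, 100)
name_cases = negative_string_inputs(max_length=50)
```

For a quantity field that accepts 1 to 100, `quantity_cases` is `[0, 1, 2, 99, 100, 101]`: two values that must be rejected and four that must be accepted.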

"AI is not a replacement for structured testing - it is an amplifier that enhances dynamic test coverage by exploring the edges humans often miss." – Sukhleen Sahni

These adaptive capabilities make testing more efficient, while also improving the accuracy of results.

Real-Time Execution and Local Browser Testing

AI takes automation a step further with real-time execution and local browser testing. Rock Smith’s platform uses AI agents to monitor the current state of the UI during tests, enabling them to handle timing issues, unexpected pop-ups, and browser-specific quirks that often cause traditional scripts to fail. By staying in sync with the browser state, these agents avoid the pitfalls of relying on outdated snapshots.

Distributed execution powered by AI is another major advantage - it’s up to 10× faster than running tests sequentially. Tests can be distributed across multiple regions and devices, speeding up feedback loops. For sensitive data, Rock Smith supports local browser execution, while its diff-aware prioritization ensures only tests affected by recent code changes are run, further accelerating the process.
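Diff-aware prioritization boils down to intersecting the files a change touches with the files each test exercises. Here is a minimal sketch, assuming a coverage map from test names to source files is already available; the map, file paths, and `select_tests` function are hypothetical and not taken from any specific tool.

```python
def select_tests(changed_files: set[str],
                 coverage_map: dict[str, set[str]]) -> list[str]:
    """Return the tests that exercise at least one changed file, sorted by name."""
    return sorted(
        test for test, files in coverage_map.items()
        if files & changed_files  # non-empty intersection means the test is affected
    )


# Hypothetical mapping of tests to the source files they exercise.
coverage_map = {
    "test_login": {"auth/login.py", "auth/session.py"},
    "test_checkout": {"cart/checkout.py", "payments/stripe.py"},
    "test_profile": {"users/profile.py", "auth/session.py"},
}

affected = select_tests({"auth/session.py"}, coverage_map)
```

A change to `auth/session.py` selects `test_login` and `test_profile` and skips `test_checkout`. The approach is only as reliable as the coverage map, so teams typically still run the full suite on a schedule.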

AI also improves failure analysis. It identifies root causes with 90–94% accuracy, compared to just 20% for manual methods. This precision reduces triage time significantly - from 20 minutes to just 6 minutes in some B2B SaaS implementations. By focusing on real issues rather than false positives, teams can avoid the "flaky tax," which costs large enterprises over $2,200 per developer each month in wasted time.

Traditional QA vs. AI-Powered Solutions

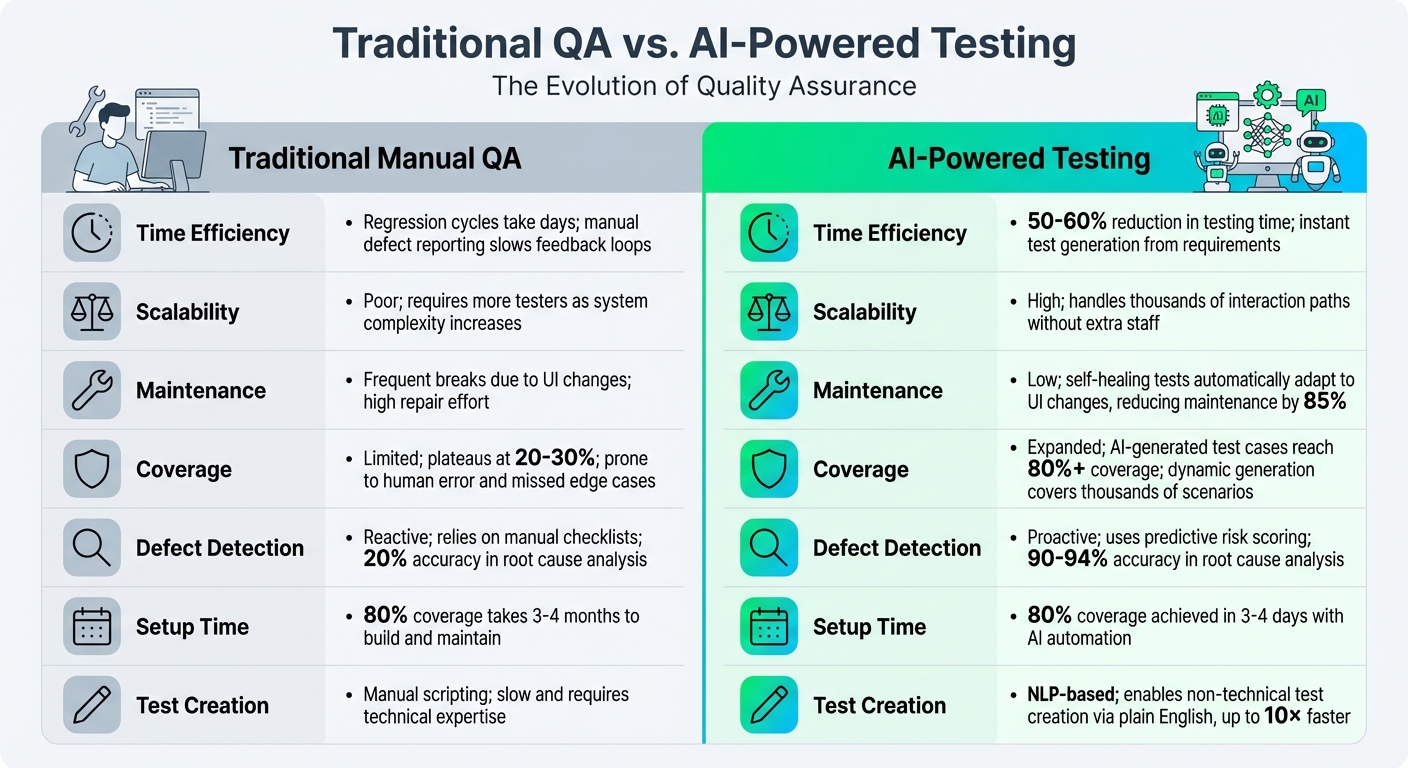

Traditional QA vs AI-Powered Testing: Key Metrics Comparison

Manual workflows in QA come with their own set of limitations, but AI is changing the game by redefining efficiency and scalability in quality assurance.

The transition from traditional QA to AI-driven testing marks a major shift in how teams ensure product quality. In traditional setups, human testers are responsible for writing, executing, and verifying tests - an approach that grows increasingly cumbersome as product complexity rises. Scaling such processes manually becomes impractical.

AI-powered testing flips this model on its head. Instead of increasing headcount to manage the growing number of interaction paths and microservices, AI scales effortlessly without additional staff. The results are striking: AI-assisted QA can slash manual testing efforts by 50% and reduce overall testing time by up to 60%. Furthermore, machine learning algorithms improve defect detection rates by 30% to 50% compared to traditional methods.

"Quality assurance in 2025 is far more advanced and integrated than it was ten years ago. A decade ago, QA teams mostly relied on manual test execution, step-by-step checklists and basic automated scripts." – Duong Nguyen Thuy, SmartDev

Traditional automation has its own challenges - scripts often fail when UI changes, forcing teams to spend 60–80% of their time fixing broken tests. AI solutions, such as Rock Smith, address this with self-healing capabilities that automatically repair broken locators, cutting maintenance efforts by 85%. This allows QA engineers to focus on more impactful tasks rather than continuously troubleshooting test scripts.

The advantages of AI-powered testing over traditional methods are clear when comparing their efficiency, scalability, and ease of maintenance. The table below highlights these key differences.

Comparison Table: Bottlenecks, Manual QA, and AI Solutions

| Feature | Traditional Manual QA | AI-Powered Testing |
|---|---|---|
| Time Efficiency | Regression cycles take days; manual defect reporting slows feedback loops | 50–60% reduction in testing time; instant test generation from requirements |
| Scalability | Poor; requires more testers as system complexity increases | High; handles thousands of interaction paths without extra staff |
| Maintenance | Frequent breaks due to UI changes; high repair effort | Low; self-healing tests automatically adapt to UI changes, reducing maintenance by 85% |
| Coverage | Limited; plateaus at 20–30%; prone to human error and missed edge cases | Expanded; AI-generated test cases reach 80%+ coverage; dynamic generation covers thousands of scenarios |
| Defect Detection | Reactive; relies on manual checklists and human focus; 20% accuracy in root cause analysis | Proactive; uses predictive risk scoring to find bugs earlier; 90–94% accuracy in root cause analysis |
| Setup Time | 80% coverage takes 3–4 months to build and maintain | 80% coverage achieved in 3–4 days with AI automation |
| Test Creation | Manual scripting; slow and requires technical expertise | NLP-based; enables non-technical test creation via plain English, up to 10× faster |

Conclusion

AI-driven testing is reshaping quality assurance by automating tedious workflows and reducing the burden of repetitive maintenance tasks. This shift not only simplifies processes but also brings measurable savings in both time and cost.

Rock Smith takes a fresh approach by replacing fragile, selector-based scripts with visual intelligence and semantic targeting. Its self-healing features automatically adjust to changes in code and UI, cutting test breakage rates down to just 3–5%, compared to the usual 30%. The platform also autonomously generates edge cases, covering boundary values and security scenarios like XSS and SQL injection - no manual brainstorming required.

Teams using Rock Smith have reported a 50–80% drop in manual testing effort and the ability to complete regression suites up to 90% faster. For example, a digital payments provider that adopted autonomous testing in October 2025 saw its release frequency triple, defect rates fall by 64%, and annual savings reach around $220,000.

Features like local browser execution and natural language test creation further boost team flexibility, turning QA into a strategic advantage.

FAQs

How can AI-powered QA tools help cut testing costs?

AI-powered QA tools cut down testing costs by automating essential tasks such as test creation, execution, and maintenance. This approach reduces the reliance on manual labor, speeds up testing cycles, and eliminates many time-consuming processes.

By simplifying workflows and quickly detecting issues, companies can slash testing expenses by as much as 80%. These tools also adjust to application updates, lowering the ongoing costs of maintaining test cases and ensuring efficiency over time.

How does AI help address flaky tests in QA workflows?

AI plays a key role in addressing flaky tests by enabling self-healing test suites. These suites can automatically adjust to UI changes, keeping tests stable and dependable. This means fewer false failures, less need for manual fixes, and improved accuracy across the board.

With AI, QA teams can reclaim time, work more efficiently, and concentrate on the most important elements of software quality - rather than getting bogged down by inconsistent test results.

Can team members without technical skills create tests using AI?

Yes. AI lets non-technical team members create tests by describing test flows in plain, everyday language, which the tools then transform into detailed, actionable test steps. This method encourages collaboration between technical and non-technical team members, making the QA process smoother and ensuring that everyone can play a meaningful role.