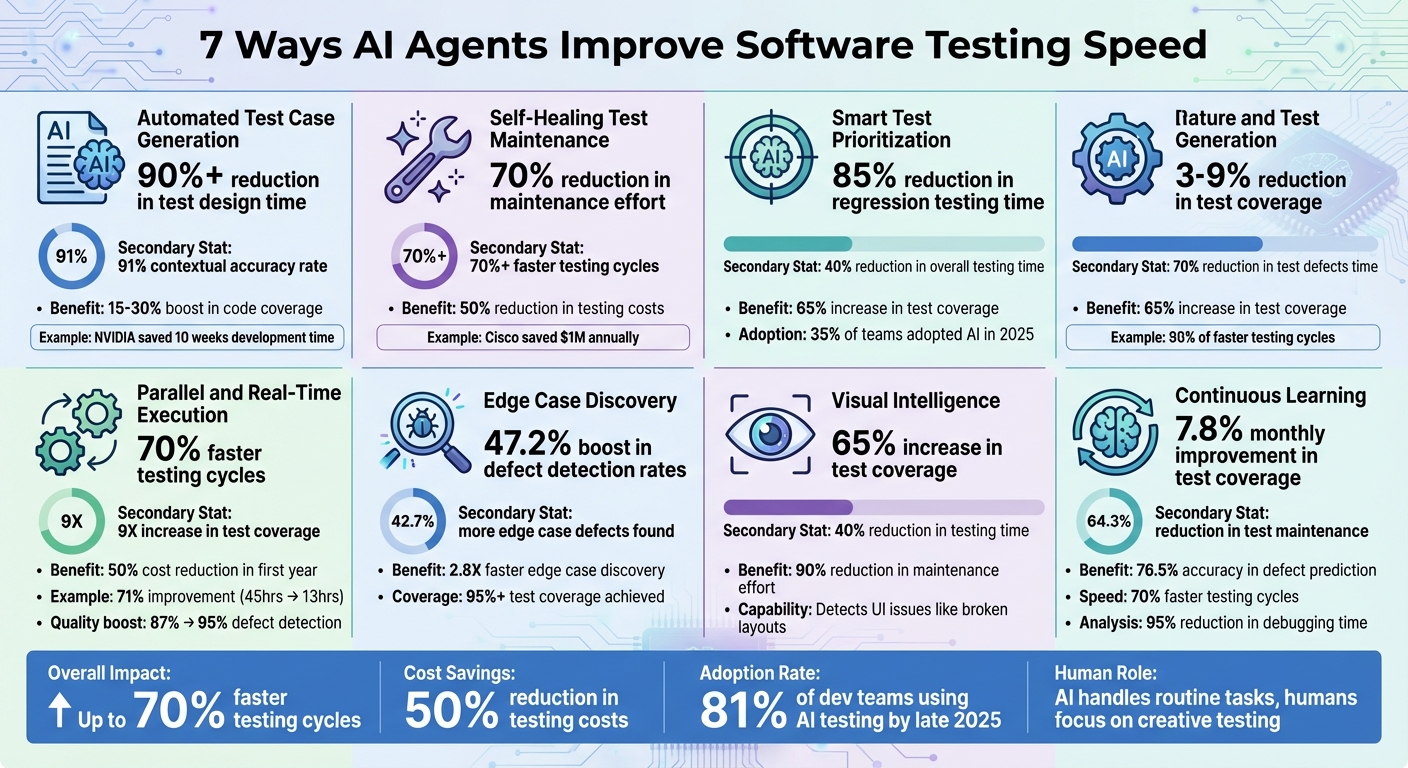

7 Ways AI Agents Improve Software Testing Speed

AI agents speed up QA by auto-generating tests, self-healing scripts, prioritizing high-risk cases, running parallel checks, and finding edge cases.

AI agents are transforming software testing by automating repetitive tasks and reducing testing cycle times. They help QA teams deliver faster, more accurate results while addressing common challenges like test maintenance and limited coverage. Here's how AI is making a difference:

- Automated Test Case Generation: AI creates test cases from project documents using NLP, saving time and reducing errors.

- Self-Healing Test Maintenance: Automatically updates scripts when UI changes occur, cutting maintenance efforts by up to 70%.

- Smart Test Prioritization: Focuses on high-risk areas, reducing regression testing time by up to 85%.

- Parallel and Real-Time Execution: Runs multiple tests simultaneously, slashing testing cycles by up to 70%.

- Edge Case Discovery: Identifies bugs in unscripted workflows, boosting defect detection rates by 47%.

- Visual Intelligence: Detects UI issues like broken layouts using computer vision, increasing test coverage by 65%.

- Continuous Learning: Learns from every test cycle to refine strategies and improve defect detection accuracy.

AI-powered testing tools not only speed up software development but also improve quality and reduce costs.

Compared to traditional testing methods, which often involve repetitive manual work, platforms like Functionize's next-gen AI testing tools automate tasks such as test case generation and script maintenance, significantly reducing time and errors. These AI-driven solutions also enhance test coverage, helping teams detect more defects in less time. Unlike manual testing, which can miss edge cases, AI tools adapt and evolve with each test cycle, ensuring a more thorough and efficient process.

While AI handles routine tasks, human testers remain crucial for creative and user-focused testing.

Overview

1. Automated Test Case Generation

Automation of Manual Processes

Creating test cases manually is both time-consuming and prone to mistakes. Engineers often spend countless hours poring over project documents, trying to convert them into structured test scenarios. AI agents are changing the game by using Natural Language Processing (NLP) to handle this process automatically. They can analyze project documents, extract the intended behaviors, and generate test cases that are ready to execute.

In practice, these systems can output executable test cases in formats such as Gherkin scenarios or Playwright scripts. One example dates from October 2024, when NVIDIA's DriveOS team used the HEPH framework, led by Max Bazalii, to translate requirements automatically into C/C++ test cases. This cut development time by as much as 10 weeks.

"HEPH uses an LLM agent for every step in the test generation process - from document traceability to code generation. This multi-agent system enables the automation of the entire testing workflow and saves engineering teams many hours." – Max Bazalii, Principal System Software Engineer, NVIDIA
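HEPH chains LLM agents together. The sketch below is a much simpler, rule-based stand-in that shows only the output side of the idea: it turns a structured requirement sentence into a Gherkin scenario tagged with its requirement ID. The `REQ-<n>: When ..., the system shall ...` format and all function names are hypothetical assumptions. They are not part of HEPH or any specific tool.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Hypothetical requirement format (an assumption for this sketch):
#   "REQ-<number>: When <condition>, the system shall <behavior>."
REQ_PATTERN = re.compile(
    r"^(?P<id>REQ-\d+):\s*When (?P<cond>.+?), the system shall (?P<behavior>.+?)\.?$",
    re.IGNORECASE,
)


@dataclass
class Scenario:
    req_id: str
    text: str


def to_gherkin(requirement: str) -> Optional[Scenario]:
    """Convert one requirement sentence into a Gherkin scenario, or None if it doesn't match."""
    m = REQ_PATTERN.match(requirement.strip())
    if m is None:
        return None  # free-form text would need an NLP/LLM step; this template cannot parse it
    req_id = m.group("id").upper()
    behavior = m.group("behavior")
    lines = [
        f"@{req_id}",  # the tag preserves the link back to the source requirement
        f"Scenario: {req_id} {behavior}",
        f"  When {m.group('cond')}",
        f"  Then the system should {behavior}",
    ]
    return Scenario(req_id, "\n".join(lines))


if __name__ == "__main__":
    s = to_gherkin("REQ-12: When the user submits an empty password, the system shall display an error message.")
    print(s.text)
```

Keeping the requirement ID as a Gherkin tag is one simple way to preserve traceability from each generated test back to its source document.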

Reduction of Testing Cycle Time

Automating test case generation not only speeds up the creation process but also significantly shortens the overall testing cycle. AI-driven tools have been shown to reduce test design time by over 90%. This efficiency is especially critical for teams committed to continuous delivery, where delays in testing can slow down the entire pipeline. Beyond speed, these tools deliver contextual accuracy rates as high as 91%, ensuring the generated tests are not just fast but also relevant and dependable.

Improvement in Test Coverage and Defect Detection

This AI-powered approach doesn’t just save time - it also makes testing more thorough. Automated test generation broadens test coverage by identifying scenarios that might be missed manually, such as edge cases, negative tests, and error conditions. Studies reveal that adopting AI-driven testing can boost code coverage by 15% to 30% compared to manual methods. This is because AI agents can examine the entire application, from the user interface to API endpoints, and create tests for scenarios that would otherwise be impractical to cover.

Additionally, these AI tools maintain a direct link between each test case and its original requirement. This "digital thread" simplifies compliance reviews and audits. Any requirement without a linked test is immediately visible, so teams can verify 100% requirement coverage rather than assume it.
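Here is a minimal sketch of such a traceability check. It assumes each test declares the requirement IDs it verifies. The data shapes and the `trace` helper are hypothetical. Coverage reaches 100% only when every requirement has at least one linked test, and this check makes any gap visible. It also flags "orphan" tests that cite requirements that no longer exist.

```python
from collections import defaultdict


def trace(requirements: list[str], tests: dict[str, list[str]]) -> dict:
    """Build a requirement -> tests matrix and report gaps in both directions."""
    matrix: dict[str, list[str]] = defaultdict(list)
    for test_name, req_ids in tests.items():
        for req_id in req_ids:
            matrix[req_id].append(test_name)

    known = set(requirements)
    uncovered = [r for r in requirements if not matrix.get(r)]  # requirements with no linked test
    orphans = sorted(r for r in matrix if r not in known)        # tests citing unknown requirements
    covered = len(requirements) - len(uncovered)
    coverage_pct = 100.0 * covered / len(requirements) if requirements else 100.0
    return {
        "matrix": {r: matrix.get(r, []) for r in requirements},
        "uncovered": uncovered,
        "orphans": orphans,
        "coverage_pct": coverage_pct,
    }
```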

2. Self-Healing Test Maintenance

Automation of Manual Processes

When your application's UI gets updated - whether it's a button moving, an element ID changing, or a workflow being adjusted - traditional test scripts often fail instantly. Engineers then have to spend countless hours manually fixing these scripts, updating locators, and sorting through false failures. Self-healing AI agents, however, take a different approach. By using computer vision and natural language processing (NLP), these agents detect UI changes - like a "Login" button being renamed to "Sign in" - and automatically update test scripts in real time.
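Commercial agents use computer vision and NLP for this step. The sketch below substitutes plain text similarity from Python's standard `difflib`, which is enough to show the control flow of a self-healing locator. It tries the recorded selector first, falls back to the closest element with the same role, and persists the healed selector. The flat `dom` structure, the 0.45 threshold, and the function names are assumptions for illustration.

```python
from difflib import SequenceMatcher
from typing import Optional


def text_similarity(a: str, b: str) -> float:
    """Similarity ratio in [0, 1] between two strings, case-insensitive."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()


def find_element(dom: list[dict], locator: dict, threshold: float = 0.45) -> Optional[dict]:
    """Find an element by its recorded id; if that fails, 'heal' the locator.

    `dom` is a hypothetical flat list of elements like
    {"id": "btn-login", "role": "button", "text": "Login"}.
    """
    # 1. Fast path: the recorded selector still works.
    for el in dom:
        if el.get("id") == locator["id"]:
            return el

    # 2. Healing: among elements with the same role, take the closest text match.
    candidates = [el for el in dom if el.get("role") == locator["role"]]
    if not candidates:
        return None
    best = max(candidates, key=lambda el: text_similarity(el.get("text", ""), locator["text"]))
    if text_similarity(best.get("text", ""), locator["text"]) < threshold:
        return None  # nothing similar enough: report a real failure instead of guessing

    # 3. Persist the healed selector so the next run uses it directly.
    locator.update(id=best["id"], text=best["text"])
    return best
```

For example, a locator recorded as `{"id": "btn-login", "role": "button", "text": "Login"}` heals to a button whose id changed to `btn-signin` and whose label now reads "Sign in". An unrelated "Help" button stays below the threshold and is not matched.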

Take Cisco, for example. In June 2025, they implemented UiPath's Autopilot for Testers to simplify their quality assurance process. Thanks to its self-healing capabilities, Cisco reduced their testing efforts by nearly 50%, saving over $1 million annually. This was achieved by automating manual test conversions and enabling real-time script adjustments. The result? Faster test cycles that keep pace with evolving, dynamic environments.

Reduction of Testing Cycle Time

Self-healing capabilities don't just fix scripts - they speed up the entire testing process. When UI elements, APIs, or workflows change, these AI agents dynamically update scripts, eliminating the need to pause testing to fix broken code. Organizations using AI testing have reported a 70% reduction in maintenance effort compared to traditional frameworks. On top of that, AI-driven test execution can make testing cycles over 70% faster. This means teams can focus more on finding real defects instead of wasting time on maintenance.

"Modern AI solutions don't just run tests faster - they reduce maintenance burdens that have been challenging teams since the Selenium days." – Joe Colantonio, Founder, TestGuild

Adaptability to Dynamic Environments

Self-healing AI agents go beyond fixing issues - they anticipate them. By analyzing historical data, these agents use probabilistic models, like Bayesian networks, to predict potential failures and adjust scripts proactively. For instance, if a specific feature becomes unstable, the agents can pause related tests and focus on validated areas, giving release managers cleaner, more actionable insights. This kind of context-aware decision-making shifts testing from being reactive to proactive.
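The text mentions Bayesian networks. The sketch below uses a much simpler single-variable Bayesian model, a Beta-Bernoulli posterior per feature, to illustrate the idea. Tests for a feature are paused once that feature's estimated failure probability crosses a threshold. The uniform prior, the 0.5 threshold, and all names are assumptions.

```python
from dataclasses import dataclass


@dataclass
class FeatureHistory:
    failures: int  # failed runs of tests touching this feature
    runs: int      # total runs of those tests


def failure_probability(h: FeatureHistory, alpha: float = 1.0, beta: float = 1.0) -> float:
    """Posterior mean failure rate under a Beta(alpha, beta) prior (Beta-Bernoulli model)."""
    return (h.failures + alpha) / (h.runs + alpha + beta)


def split_by_stability(history: dict[str, FeatureHistory], threshold: float = 0.5):
    """Return (features to keep testing, features whose tests to pause for triage)."""
    unstable = sorted(f for f, h in history.items() if failure_probability(h) >= threshold)
    stable = sorted(f for f in history if f not in unstable)
    return stable, unstable
```

The prior keeps the estimate sensible for features with few runs. A feature with no history starts at 0.5 instead of being judged on zero data.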

"Agentic AI can automatically adapt to interface changes, recognizing elements based on context rather than rigid selectors to minimize test maintenance efforts." – Bill Woodford, Sr. Managing Director Digital Transformation, Auxis

Improvement in Test Coverage and Defect Detection

Self-healing isn't just about keeping existing tests functional; it also makes them more trustworthy. By eliminating false positives caused by broken scripts, self-healing agents help ensure that failures reported during testing are actual defects rather than maintenance errors. This keeps test suites effective throughout development. AI agents can also boost test coverage by up to 9X by generating edge cases and scenarios that human testers might overlook.

The financial benefits are just as impressive. Companies using AI-native testing have seen a 50% reduction in testing costs thanks to self-healing and reduced maintenance. By late 2025, around 81% of development teams had already integrated AI into their testing workflows. These advancements highlight how self-healing capabilities are reshaping modern QA practices, making them faster, smarter, and more efficient.

3. Smart Test Prioritization

Building on self-healing processes, AI now takes test prioritization to the next level, making test execution faster and more efficient.

Automation of Manual Processes

Manually prioritizing tests used to be tedious and time-consuming: QA teams had to sift through entire suites to decide which tests mattered for a given release. AI removes this work by analyzing code dependencies and historical test data with models such as Graph Neural Networks (GNNs), including Graph Attention Networks. The agents also draw on real-time data from test logs, APIs, and UI states. With this information, they identify the tests most relevant to recent code changes and determine which tests can run simultaneously across different environments, shrinking the testing window significantly.

"The Test Selection Agent intelligently selects the most relevant tests to run based on recent code changes, historical test data, and risk assessments." – BrowserStack

Reduction of Testing Cycle Time

Smart test prioritization dramatically cuts down testing cycle times. Instead of running every test in a fixed order, AI agents focus on those tests that are likely to have the greatest impact, using predictive modeling to guide their decisions. By analyzing commit history, these agents pinpoint areas of the code that need closer attention, eliminating the need to run the entire test suite for minor updates. Some organizations have seen a 40% reduction in overall testing time, with regression testing efforts dropping by as much as 85%. By filtering out redundant tests and zeroing in on high-risk areas, teams can validate critical functionalities early and move on to the next development phase more quickly.

"Instead of running every test in a static order, sophisticated AI agents use predictive modeling to prioritize tests... based on change impact, historical failure rates, or even code dependencies." – PractiTest Team
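Here is a minimal sketch of scoring by change impact and failure history. The weights, the `CaseInfo` fields, and the per-test file map are assumptions for illustration. The tools described above may instead learn these relationships, for example with GNNs over the dependency graph.

```python
from dataclasses import dataclass


@dataclass
class CaseInfo:
    name: str
    covered_files: set[str]  # files this test exercises (e.g., from a coverage map)
    runs: int
    failures: int


def priority(t: CaseInfo, changed: set[str], w_change: float = 0.7, w_fail: float = 0.3) -> float:
    """Weighted mix of change impact and historical failure rate, in [0, 1]."""
    impact = len(t.covered_files & changed) / len(changed) if changed else 0.0
    fail_rate = t.failures / t.runs if t.runs else 1.0  # never-run tests are treated as risky
    return w_change * impact + w_fail * fail_rate


def select_tests(tests: list[CaseInfo], changed: set[str], budget: int) -> list[str]:
    """Pick up to `budget` tests with a non-zero score, highest priority first."""
    scored = [(priority(t, changed), t.name) for t in tests]
    ranked = sorted((s for s in scored if s[0] > 0), key=lambda s: s[0], reverse=True)
    return [name for _, name in ranked[:budget]]
```

Tests that neither touch the changed files nor have ever failed score zero and are skipped for this change. That is where the time savings on minor updates come from.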

Adaptability to Dynamic Environments

AI agents are designed to adapt on the fly to changing conditions. Using reinforcement learning, they analyze each test cycle to learn from failure rates, defect types, and root causes. When code changes occur, these agents adjust their priorities dynamically, ensuring that the most relevant tests are executed first while still maintaining comprehensive coverage.

Improvement in Test Coverage and Defect Detection

Smart prioritization doesn’t just save time - it also improves test coverage and defect detection. By focusing on high-risk areas first, AI ensures that critical functionalities are validated early. At the same time, it maintains thorough coverage across the application. AI-driven testing has led to a 65% increase in test coverage for some teams. Additionally, AI agents often uncover edge cases and combinations that human testers might miss. In 2025, 35% of software teams adopted AI specifically to enhance test coverage and efficiency. These tools also provide valuable insights into risk patterns, helping teams identify where defects are most likely to surface.

4. Parallel and Real-Time Execution

AI agents are transforming software testing by running multiple tests simultaneously across various environments. By combining this capability with prioritized test selection, these agents streamline processes and drastically reduce the time needed to deliver feedback.

Automation of Manual Processes

AI-driven orchestration takes the hassle out of scheduling and managing test runs. These agents oversee test execution across thousands of environments - whether on virtual machines, containerized setups, or cloud platforms - automatically optimizing hardware usage. Instead of relying on sequential test runs, AI agents analyze code changes in real time and select only the most relevant tests to run concurrently. This not only speeds up feedback loops but also allows virtual testers to work around the clock, delivering results by the next morning.

"You can have an army of virtual testers underneath you that work during the night." – Tal Barmeir, Co-Founder, BlinqIO
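Here is a local sketch of concurrent test execution using Python's standard `concurrent.futures`. Threads suit I/O-bound checks such as browser or API calls. The orchestration described above spreads work across VMs, containers, or cloud devices, which this single-process example does not attempt. Test names and callables are hypothetical.

```python
import time
from concurrent.futures import ThreadPoolExecutor, as_completed
from typing import Callable


def run_parallel(tests: dict[str, Callable[[], None]], workers: int = 4) -> dict[str, str]:
    """Run test callables concurrently and collect a status per test name."""
    results: dict[str, str] = {}
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = {pool.submit(fn): name for name, fn in tests.items()}
        for fut in as_completed(futures):  # handle results in completion order
            name = futures[fut]
            try:
                fut.result()
                results[name] = "passed"
            except AssertionError:
                results[name] = "failed"
            except Exception as exc:  # setup/infrastructure problems, not assertion failures
                results[name] = f"error: {type(exc).__name__}"
    return results


if __name__ == "__main__":
    def slow_check() -> None:
        time.sleep(0.5)  # stands in for waiting on a browser or network call

    start = time.perf_counter()
    print(run_parallel({f"t{i}": slow_check for i in range(4)}))
    # The four 0.5s checks overlap, so this should finish well under the 2s a sequential run needs.
    print(f"elapsed: {time.perf_counter() - start:.2f}s")
```

Separating assertion failures from other exceptions mirrors the distinction the article draws between real defects and environment noise.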

Reduction of Testing Cycle Time

The impact of parallel execution on testing speed is massive. AI-driven test execution can cut testing cycles by up to 70% compared to traditional sequential methods. By running comprehensive test suites immediately after code changes, teams get faster feedback, enabling them to fix issues quickly before they affect the broader development process.

Adaptability to Dynamic Environments

AI agents don’t just execute faster - they’re also highly adaptable. They adjust test suites in real time based on environmental changes. For instance, if a feature shows instability, the agents can pause related tests and focus on stable areas instead. They also determine which tests can run simultaneously across different platforms and devices, maintaining consistency even in complex, multi-platform settings. This adaptability eliminates the brittleness often seen in traditional automation, where tests fail when UI elements shift or are renamed.

Improvement in Test Coverage and Defect Detection

Speed doesn’t come at the expense of quality. AI agents expand test coverage by generating edge cases and complex scenarios that human testers might overlook, increasing coverage by up to nine times. By running comprehensive test suites immediately after code updates, they help catch defects earlier in the development cycle. For example, in one financial services application case study, AI-enhanced testing reduced lead time from 45 hours to just 13 hours - a 71% improvement - while boosting defect detection rates from 87% to 95%. Additionally, companies that adopt AI-powered testing frequently report a 50% reduction in testing costs within the first year.
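The 71% figure in the case study follows directly from the reported lead times:

```latex
\frac{45\,\text{h} - 13\,\text{h}}{45\,\text{h}} = \frac{32}{45} \approx 0.711 \approx 71\%
```

The defect detection change from 87% to 95% is an 8-percentage-point gain. That is roughly a 9% relative increase, since 8/87 ≈ 0.092.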

5. Edge Case and Exploratory Discovery

AI testing agents go beyond running predefined tests - they dive into unscripted workflows and unexpected inputs, uncovering bugs that manual testers or static automation often miss. By using contextual reasoning, they grasp the real purpose of UI elements, which helps them navigate intricate user journeys and adjust as workflows evolve. This ability to explore complements traditional automation by ensuring that even unexpected scenarios don’t slip through the cracks.

Automation of Manual Processes

Exploratory testing has traditionally relied on human intuition, which can be inconsistent and challenging to scale. AI agents tackle this issue by using tools like computer vision and natural language processing to assess software behavior and deduce intent - essentially mimicking human testers but at machine speed. These systems can analyze architectural documents and interface details to automatically create both positive and negative test cases, ensuring all requirements are covered.

Improvement in Test Coverage and Defect Detection

AI agents examine input combinations and edge cases that would take humans months to document. They analyze code structures, including conditional branches and complex logic, to systematically identify edge cases and negative scenarios. Using reinforcement learning, AI agents can discover edge cases 2.8 times faster than traditional methods. This approach boosts defect detection rates by 47.2% and identifies 42.7% more edge case defects.

"Generative AI can create tests that humans might miss, especially for edge cases and unusual scenarios." – Trunk.io
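The agents described here reportedly use reinforcement learning. The sketch below instead shows a much simpler, classic technique, boundary-value analysis, to illustrate the kind of edge case such exploration targets. The `validate_quantity` function and its 1-100 range are hypothetical.

```python
from typing import Callable


def boundary_cases(lo: int, hi: int) -> list[tuple[int, bool]]:
    """(value, expected_valid) pairs at, just inside, and just outside [lo, hi]."""
    values = {lo - 1, lo, lo + 1, (lo + hi) // 2, hi - 1, hi, hi + 1}
    return sorted((v, lo <= v <= hi) for v in values)


def find_edge_defects(fn: Callable[[int], bool], lo: int, hi: int) -> list[int]:
    """Inputs where the validator disagrees with the specified inclusive range."""
    return [v for v, expected in boundary_cases(lo, hi) if fn(v) != expected]


def validate_quantity(q: int) -> bool:
    # Hypothetical function under test; the spec says 1..100 inclusive,
    # but the comparison below has an off-by-one bug at the upper edge.
    return 1 <= q < 100


if __name__ == "__main__":
    print(find_edge_defects(validate_quantity, 1, 100))  # [100]
```

A mid-range "happy path" value such as 50 passes either way. Only the probe at exactly 100 exposes the bug, which is why edge-focused generation finds defects that typical scripted tests miss.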

Adaptability to Dynamic Environments

AI agents are taking exploratory testing to the next level with dynamic adaptability. By analyzing historical defect patterns and recent code changes, they prioritize high-risk areas in real time. When they find gaps in test coverage, they automatically create new test specifications to address untested scenarios or code paths, using continuous feedback loops. This adaptive process achieves over 95% test coverage and slashes maintenance efforts by up to 70% compared to traditional methods.

6. Visual Intelligence for Defect Detection

AI agents are taking defect detection to the next level by using visual intelligence, a technology powered by computer vision. Unlike traditional methods, these agents don’t just rely on rigid pixel comparisons. Instead, they interpret visual context to catch issues that functional tests often miss. Think of problems like overlapping text, broken layouts, or missing elements - visual AI identifies these by understanding the purpose and appearance of elements on the screen. For instance, even if a button is moved or renamed, these systems can still recognize it based on its role rather than relying on fragile code selectors that break with minor updates.

Automation of Manual Processes

Visual intelligence also eliminates the need for painstaking manual screenshot comparisons across countless browser and device combinations. By analyzing UI states through computer vision, AI agents can detect inconsistencies and free QA teams from repetitive tasks. Unlike pixel-matching tools that flag every tiny difference, visual AI understands context. For example, it can identify a text box as a "login input" based on its relation to nearby labels, rather than its exact position on the screen.

"AI agents use machine learning, computer vision, and natural language processing to autonomously test software applications without constant human intervention or script maintenance." - Martin Koch and Nurlan Suleymanov, aqua cloud
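Once a vision model has detected text labels and input fields on a screenshot, a simple spatial heuristic can give each field a semantic name that does not depend on its absolute position. The sketch assumes detection is already done. The `Box` structure and the nearest-label-to-the-left-or-above rule are illustrative assumptions, not any vendor's method.

```python
import math
from dataclasses import dataclass
from typing import Optional


@dataclass
class Box:
    id: str
    kind: str   # "label" or "input", as a (hypothetical) vision detector might classify it
    text: str
    x: float
    y: float
    w: float
    h: float

    @property
    def center(self) -> tuple[float, float]:
        return (self.x + self.w / 2, self.y + self.h / 2)


def name_inputs(boxes: list[Box]) -> dict[str, Optional[str]]:
    """Name each input after the nearest label that sits to its left or above it."""
    labels = [b for b in boxes if b.kind == "label"]
    names: dict[str, Optional[str]] = {}
    for inp in (b for b in boxes if b.kind == "input"):
        # Reading-order heuristic: a field's label ends before the field starts.
        candidates = [lb for lb in labels if lb.x + lb.w <= inp.x or lb.y + lb.h <= inp.y]
        if not candidates:
            names[inp.id] = None
            continue
        nearest = min(candidates, key=lambda lb: math.dist(lb.center, inp.center))
        names[inp.id] = f"{nearest.text.lower()} input"
    return names
```

Because names come from relative positions, moving the whole form elsewhere on the page leaves them unchanged. This is the property that lets a test refer to "the username input" instead of a fixed coordinate or selector.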

Reduction of Testing Cycle Time

By automating these manual processes, visual intelligence significantly speeds up testing workflows. Companies using this technology have reported a 40% reduction in overall testing time. These systems streamline feedback loops by focusing only on meaningful visual changes, allowing developers to bypass the time-consuming reproduction phase and go straight to fixing bugs.
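The text contrasts visual AI with strict pixel matching. The sketch below is not a learned model. It shows the simplest step in that direction: a tolerance-based diff that ignores small per-pixel noise and flags a change only when enough of the screen differs. The thresholds and the grayscale list-of-lists image format are assumptions.

```python
Image = list[list[int]]  # grayscale pixels, 0-255, row-major


def visual_diff(base: Image, current: Image, pixel_tol: int = 16, min_changed_frac: float = 0.01):
    """Return (is_meaningful_change, fraction_of_changed_pixels).

    Pixels differing by <= pixel_tol count as unchanged (anti-aliasing, compression noise);
    a change is reported only if at least min_changed_frac of the pixels differ.
    """
    if len(base) != len(current) or any(len(a) != len(b) for a, b in zip(base, current)):
        return True, 1.0  # a size change is always worth a look
    total = changed = 0
    for row_a, row_b in zip(base, current):
        for pa, pb in zip(row_a, row_b):
            total += 1
            if abs(pa - pb) > pixel_tol:
                changed += 1
    frac = changed / total if total else 0.0
    return frac >= min_changed_frac, frac
```

Slight shading noise across the whole screen is ignored. A missing 20x20 block, such as a button that failed to render, is reported.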

Improvement in Test Coverage and Defect Detection

Visual AI doesn’t just save time - it also improves the depth and accuracy of testing. It uncovers "invisible" issues like styling glitches, layout shifts, and overlapping elements that functional scripts often overlook. Test coverage can increase by as much as 65%, as these agents explore visual paths that humans might miss. Additionally, self-healing capabilities reduce maintenance efforts by up to 90%, as the agents adapt to changes in the interface. This ensures consistency across different platforms, whether it’s verifying that a web app looks the same on an iPhone 15 or a Samsung Galaxy S24. By integrating visual intelligence, QA teams can boost efficiency and deliver higher-quality results in today’s complex testing environments.

7. Continuous Learning and Optimization

AI agents are designed to get smarter with every test cycle. Unlike traditional scripts that stay the same until someone manually updates them, AI agents rely on reinforcement learning to refine their strategies. They evaluate which tests uncover the most bugs, identify frequently changing code areas, and pinpoint where failures tend to occur. This allows them to shift their focus to high-risk areas while trimming unnecessary or low-value tests from the suite.
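The text attributes this learning to reinforcement learning. As a much simpler stand-in, the sketch below keeps an exponential moving average of each test's failures. Tests that keep catching bugs stay near the top, while tests that never fail drift toward a trim list. The learning rate, floor, and function names are hypothetical.

```python
def update_scores(scores: dict[str, float], results: dict[str, bool], lr: float = 0.2) -> dict[str, float]:
    """Blend each test's latest outcome (1.0 = failed, 0.0 = passed) into a running score."""
    updated = dict(scores)
    for test, failed in results.items():
        prev = updated.get(test, 0.5)  # neutral starting score for tests never seen before
        updated[test] = (1 - lr) * prev + lr * (1.0 if failed else 0.0)
    return updated


def trim_candidates(scores: dict[str, float], floor: float = 0.05) -> list[str]:
    """Tests whose score decayed below `floor`: candidates for human review, not auto-deletion."""
    return sorted(t for t, s in scores.items() if s < floor)
```

Recent outcomes weigh more than old ones, so a test that starts failing after a refactor climbs back up quickly.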

Reduction of Testing Cycle Time

Thanks to their self-healing and prioritization abilities, AI agents significantly speed up testing cycles. By analyzing historical failure data, they can predict which tests are most likely to fail after a code change and prioritize those. This targeted approach can drastically reduce execution times - some organizations have seen testing cycles become up to 70% faster compared to traditional methods. On top of that, AI agents automate failure analysis and root cause identification, cutting the time engineers spend on manual debugging and triage by as much as 95%. The result? A faster, more efficient feedback loop.
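Full root cause analysis is beyond a short example. A common first step in automated triage, however, is grouping failures whose messages differ only in volatile details such as timeouts, IDs, or selectors. The normalization rules below are assumptions for illustration. They do not identify root causes; they only perform the clustering that makes root-cause work faster.

```python
import re
from collections import defaultdict


def signature(message: str) -> str:
    """Normalize a failure message so runs differing only in values group together."""
    msg = re.sub(r"0x[0-9a-fA-F]+", "<hex>", message)  # memory addresses, ids
    msg = re.sub(r"\d+", "<n>", msg)                   # counts, timeouts, status codes
    msg = re.sub(r"'[^']*'", "'<s>'", msg)             # quoted selectors and values
    return msg.strip()


def triage(failures: dict[str, str]) -> dict[str, list[str]]:
    """Group failing tests by message signature, largest group first."""
    groups: dict[str, list[str]] = defaultdict(list)
    for test, message in failures.items():
        groups[signature(message)].append(test)
    return dict(sorted(groups.items(), key=lambda kv: len(kv[1]), reverse=True))
```

An engineer then inspects one representative per group instead of every failure.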

Adaptability to Dynamic Environments

One of the standout features of AI agents is their ability to adapt as applications evolve. For example, if a UI element moves or an API endpoint changes, the agent doesn't simply fail - it learns to identify the element based on its function rather than its exact location or code selector. This self-healing capability reduces the need for test maintenance by an average of 64.3%.

Improvement in Test Coverage and Defect Detection

AI agents don't just run tests quicker - they run smarter tests. Through self-learning, they improve test coverage by 7.8% each month. These systems can explore unscripted paths and edge cases that human testers might miss, expanding coverage by up to 9X. With predictive analytics, they can also forecast defect clusters with 76.5% accuracy based on historical patterns, helping teams catch problems before they make it to production. These continuous improvements highlight the game-changing role AI plays in modern QA workflows.

Conclusion

AI agents are reshaping the way teams approach software testing, speeding up test cycles by as much as 70% while improving overall quality. Through features like autonomous test generation, self-healing maintenance, intelligent prioritization, and continuous learning, these tools tackle long-standing QA challenges. As highlighted earlier, AI-driven testing offers faster results, broader coverage, and reduced costs.

But beyond these performance boosts, the move toward autonomous testing emphasizes a balanced approach. Ninad Pathak, Enterprise Marketing Manager at LambdaTest, puts it best:

"AI testing agents, no matter how sophisticated, still need a human in the loop (HITL) to verify findings, test cases, and scripts. AI agents are not here to replace your testing team - they're here to empower your team to be faster and far more efficient."

Human testers continue to play a critical role, bringing strategic thinking and creativity to the table. While AI excels at repetitive, high-volume tasks like regression testing and defect detection, it can't replicate the exploratory creativity or nuanced understanding of user experience that human testers provide. This "human-in-the-loop" approach ensures that speed and quality work hand in hand.

If your QA team struggles with slow cycles or limited test coverage, Rock Smith's AI agents could be the solution. These tools use visual intelligence and semantic targeting to interpret applications much like humans do. They automatically generate test flows in plain English and adapt seamlessly to changes without constant script updates. Whether you're working on a small pilot project or scaling across an entire workflow, these agents help your team deliver faster releases without compromising quality.

FAQs

How do AI agents improve test coverage in software testing?

AI agents are transforming how we approach test coverage by uncovering scenarios that traditional methods often miss. They dive deep into code, user flows, and usage data to automatically create test cases for edge cases, high-risk areas, and paths that might otherwise go untested. What’s more, these agents adjust on the fly to application changes - whether it’s a UI update or a newly introduced feature - reducing the need for manual updates and helping close coverage gaps.

On top of that, AI agents zero in on what matters most. By analyzing code changes, they focus testing efforts on the areas most likely to be impacted. This smarter, more targeted strategy not only broadens coverage but also keeps the process efficient, helping QA teams deliver faster and more dependable results. And because these agents continuously learn and refine their test cases, they provide testing that keeps pace with the evolving software, ensuring risks are managed and functionality stays reliable.

How do human testers and AI agents work together in software testing?

Human testers and AI agents work together by blending human judgment with automated precision. AI agents take care of repetitive tasks like creating, running, and updating test cases, freeing human testers to concentrate on setting testing objectives, prioritizing high-risk areas, and interpreting intricate test results.

Testers also contribute to improving AI performance by supplying high-quality data, reviewing AI-generated outputs, and refining its capabilities over time. This partnership not only speeds up and streamlines testing but also ensures that human insight is applied to handle complex decisions and verify business logic accurately.

How does self-healing AI minimize test maintenance efforts?

Self-healing AI takes the hassle out of test maintenance by automatically spotting and fixing issues in test scripts when changes occur - like updates to a user interface or modifications to elements. Instead of requiring someone to step in and make adjustments, the AI identifies failures, finds the updated elements, and updates the scripts in real time. This keeps everything running smoothly without the need for manual effort.

What’s more, the AI gets smarter over time. It learns from these adjustments, building a library of patterns and common changes. This allows it to predict and address potential issues before they even happen. By cutting down on the time spent maintaining scripts, QA teams can shift their focus to higher-value tasks like exploratory testing or digging deeper into defect analysis. The payoff? Faster testing cycles, increased efficiency, and reduced costs.